State Space

Weiss Ch. 10.5. (not really...)

Weiss's title is "Backtracking Algorithms". For me, backtracking is a

particular, memory-saving implementation of depth-first graph search.

His game-playing example is not exactly backtracking. So let's take

him to mean "Graph Search", which is a highly practical and basic idea

with many implementations and ideas running around, especially driven

by artificial intelligence.

Often a problem or a situation is modeled computationally or

mathematically by a state. E.g. quantum state, state vector of

a rocket: orientation, location, speed as in

(A, B, C, X, Y, Z, X', Y', Z'), location of pieces on a chess-board, location

of a salesman on a map, etc. In the relevant domain, an

operator changes the state (pushes a pawn, accelerates, goes to

next city...).

The abstract, often infinite, set of all states, including the connections

between them induced by operators, is the

state space of a problem. A (very) early and

still-central AI model of human problem solving uses the ideas so

far, along with an

initial state for the problem and a

goal state to be achieved.

E.g. SAT: initial state is a set of unassigned variables.

There's a data base of

logical clauses, and

goal state is a set of variable assignments (P is T, Q is F)

that satisfies the

clauses. Or there's an initial stacking of blocks and the goal is

another such configuration. Etc.

SFS: You're a robot located on a discrete grid sitting on a

NSEW

world coordinate system. You have two

operators: "move one N" and "move one E". Draw the state space

(also show the operators that transform states).
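
One way to get a feel for this space before drawing it is a minimal sketch like the following. It assumes a state is an (east, north) pair of integer coordinates and that the grid is unbounded; the names `OPERATORS`, `successors` and `reachable` are made up for illustration.

```python
# A state is a grid position (east, north). Both operators are assumed
# to always succeed (the grid has no edge to the north or east).
OPERATORS = {
    "move one N": lambda s: (s[0], s[1] + 1),
    "move one E": lambda s: (s[0] + 1, s[1]),
}


def successors(state):
    """Return (operator name, resulting state) for every operator."""
    return [(name, op(state)) for name, op in OPERATORS.items()]


def reachable(start, steps):
    """All states reachable from start in at most `steps` operator applications."""
    frontier, seen = {start}, {start}
    for _ in range(steps):
        # Apply every operator to every frontier state; keep only new states.
        frontier = {t for s in frontier for _, t in successors(s)} - seen
        seen |= frontier
    return seen
```

Note that (1, 1) is produced twice (N then E, or E then N) but stored once: the state space is a graph, even though a search over it unfolds as a tree.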

Problem Solving

Problem solving is the process of navigating the state space (graph) by

applying an operator to the current state, in search of the goal.

The state space is the totality of possibilities, and the

search results in a search tree, which to an

observer looks like a tree of states that grows with exploration: it

has the initial state as root and the hope is to find the goal, which

would be a leaf. The path from root to goal is the sequence of

operations needed to achieve the goal (but sometimes we don't care how we

did it.)

Usually (and for us) the search tree is not an explicit data

structure.

It is implicit in the recursive-calling structure of a BFS or DFS

variant.

The "state of the node" is actually preserved in the local variables

of one invocation of the search program, which itself figures out the

successors of its argument state given the available operators

and recursively calls itself to explore them. If its argument is

actually a goal state, it can declare victory and quit, or return up

the calling tree along the successful search path. This is good for

us... the programming language manages our tree for us.
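
A minimal sketch of that idea, assuming the caller supplies `is_goal` and `successors` functions (hypothetical names) and that states are hashable. The search tree never exists as a data structure: each node lives in one call's local variables, and the path is rebuilt while returning up the calls.

```python
def dfs(state, is_goal, successors, on_path=()):
    """Return the list of states from `state` to a goal, or None."""
    if state in on_path:           # don't loop back along the current path
        return None
    if is_goal(state):
        return [state]             # declare victory
    for nxt in successors(state):
        rest = dfs(nxt, is_goal, successors, on_path + (state,))
        if rest is not None:
            return [state] + rest  # extend the path on the way back up
    return None


# A tiny made-up graph, just to exercise the routine.
GRAPH = {"A": ["B", "C"], "B": ["D"], "C": ["D", "E"], "D": [], "E": []}
path = dfs("A", lambda s: s == "E", lambda s: GRAPH[s])
# path is ["A", "C", "E"]: the B-D branch was explored and abandoned first.
```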

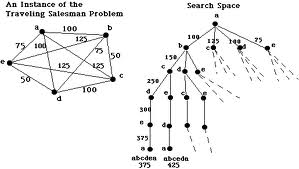

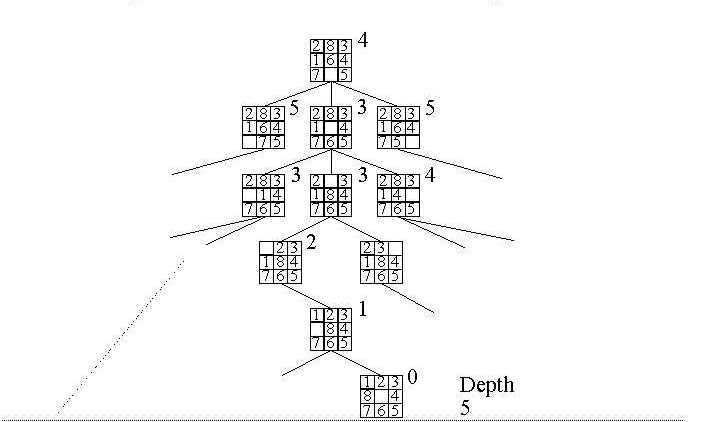

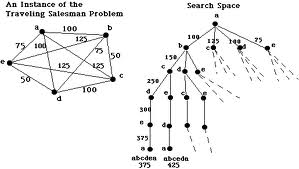

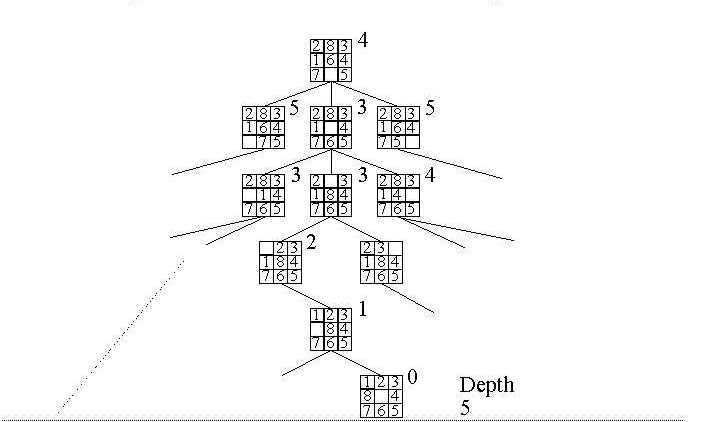

Examples: TSP state space and search tree

8-puzzle search tree

Parsing a sentence as search

State Space Search Representation

First, formalize at the appropriate level: usually, for this sort of

problem, what any normal non-wise-ass 10 year old would do.

In a problem about pouring

water from jugs to get some even number of gallons, we don't worry

about

evaporation. We don't consider the operation of pouring a random

amount, or pouring a fractional amount. Operations complete

successfully, etc.

The formalism interacts with the representations, especially with the

state. From the state we need to be able quickly to generate

successors that result from

applying operations, and to check conditions like "is this

a goal state?", and to compute the "goodness" of a state (often

an approximation to how close to the goal we are) or its "badness"

(e.g. the cost so far to get there). We need good representations.

Three Representations

State: As simple and efficient as possible. Vector, list, etc

is good. Worth some thought... E.g. NxN array sucks for N-queens.

Tree Node: Probably a good idea to encapsulate the state along with

other ancillary information like parent pointers, level of this node

in the search, costs and goodness measures....

Search Tree: For some problems need to extract path from

initial to goal state. That would imply for BFS (coming up soon) that the tree nodes

on the queue have parent pointers. In DFS the tree is in the calling

sequence, so constructing the path needs to keep some

information (the state or the operator used)

from every level when returning from goal.
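
As a rough illustration of the tree-node idea, here is one possible layout. The field names and the helpers `child` and `path_to` are assumptions for this sketch, not a prescribed design.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Node:
    state: Any                        # the problem state itself
    parent: Optional["Node"] = None   # None for the root (initial state)
    operator: Optional[str] = None    # operator that produced this state
    depth: int = 0                    # level of this node in the search
    cost: float = 0.0                 # cost so far to get here


def child(node, operator, state, step_cost=1.0):
    """Wrap a successor state in a node that remembers where it came from."""
    return Node(state, node, operator, node.depth + 1, node.cost + step_cost)


def path_to(node):
    """Follow parent pointers back to the root; return the operators in order."""
    ops = []
    while node.parent is not None:
        ops.append(node.operator)
        node = node.parent
    return list(reversed(ops))
```

With nodes like these on a BFS queue, the answer "how did we get here?" is one call to `path_to` on the goal node.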

State Space Search Control: Weak Methods

Weak search methods ignore effort-saving sanity checks and knowledge

about

the domain.

Search trees are often immense (Chess, say) and exponentially-behaved:

Operations = (branching factor)^levels.
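
To put a number on that, assume (as a simplification) that every node has exactly b successors and the tree is searched to depth d. The symbols N, b and d are introduced here for illustration. The total node count is then a geometric series:

```latex
N(b, d) \;=\; \sum_{i=0}^{d} b^{i} \;=\; \frac{b^{d+1} - 1}{b - 1}, \qquad b > 1
% e.g. b = 10, d = 6:  N = (10^{7} - 1)/9 = 1{,}111{,}111 nodes
```

The last level alone holds b^d of those nodes, which is why the deepest level dominates the cost.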

AI researchers were originally

interested in the human side of problem-solving, so wondered how we

achieve the effect of "brute force" exponential search.

We have several choices in searching a state-space (graph), with

predictable trade-offs. This is CSC 242 material, good book is

Artificial Intelligence: a Modern Approach (3rd ed.), Russell and

Norvig.

Depth-first search tends to generate deep skinny trees. It needs minimal

(linear in depth)

working memory (for generated successors and recursive calls) but can

dive down to infinity. Given a node, DFS checks for end

conditions (e.g. found goal, at max. allowed depth); if it's done, it returns

status (e.g. success or suspended).

Else it generates successors: if it calls self as each is

generated, that is technically backtracking. If it generates

and saves them all at once, then works thru them that's just DFS.

Breadth-first search generates maximally-bushy and minimally

deep

searches. It uses iteration and a queue, not recursion. It is exponentially

expensive in working memory (active nodes). Conservative: does not miss any

possibilities at any depth, but explores all successors to all nodes

at the current depth before looking deeper (forward) into the search.

Depth-limited search is a variant of DFS or BFS to control

its depth and so avoid infinite searches. So we'd talk about "DFS of

depth 5", say.
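
A sketch of depth-limited DFS, assuming hypothetical `is_goal` and `successors` callables. Because `grid_successors` below is a generator, each successor is explored as soon as it is produced, which is the backtracking flavor described above; handing in a function that returns a full list gives the "generate them all, then work through them" flavor with no change to the search routine.

```python
def depth_limited_dfs(state, is_goal, successors, limit):
    """Return a path (list of states) to a goal within `limit` steps, else None."""
    if is_goal(state):
        return [state]
    if limit == 0:
        return None                # cut off: do not dive any deeper
    for nxt in successors(state):
        rest = depth_limited_dfs(nxt, is_goal, successors, limit - 1)
        if rest is not None:
            return [state] + rest
    return None


def grid_successors(s):
    """Lazily yield the N and E neighbours of grid state s = (east, north)."""
    yield (s[0], s[1] + 1)
    yield (s[0] + 1, s[1])
```

On the unbounded N/E grid an unlimited DFS would march north forever; with `limit=3` the search for (2, 1) gives up on the northern branches and finds the goal.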

Uniform-cost search is BFS ordered by path cost (cheapest node first); it is plain BFS if

the modelled cost of applying operators is uniform. BFS is not recursive; it's an iteration until some

stopping condition (depth limit, find goal). Each iteration takes a

state off a single "job queue", checks if it can stop, else generates its

successors,

puts them on the queue, and iterates.
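
The loop just described might look like the sketch below. The `parent` dictionary is an addition for this example: it doubles as the record of states already seen and as the parent pointers needed to recover the path. `is_goal` and `successors` are assumed to be supplied by the caller.

```python
from collections import deque


def bfs(start, is_goal, successors):
    """Return a path with the fewest operators from start to a goal, or None."""
    queue = deque([start])         # the single "job queue"
    parent = {start: None}         # state -> state it was generated from
    while queue:
        state = queue.popleft()
        if is_goal(state):
            path = []
            while state is not None:   # walk the parent pointers back to start
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:      # skip states generated before
                parent[nxt] = state
                queue.append(nxt)
    return None
```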

Bi-directional search: We know the goal, and the start, so why

not search both directions? May be possible or useful depending on

the problem.

Iterative deepening combines characteristics of

DFS and BFS at a small multiple of

the cost. About half the nodes in a

complete binary tree are at the leaves, so it's not that expensive to

do DFS to level N, then just repeat the DFS to level N+1, etc. BFS

conservative

behavior

with DFS memory cost.
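
A sketch of iterative deepening under the same assumptions as before (caller-supplied `is_goal` and `successors`; `max_depth` is an optional safety valve invented for this example). For a complete binary tree the repeated passes visit 2^(N+2) - N - 3 nodes in total against 2^(N+1) - 1 for a single pass to level N, so roughly twice the work.

```python
from itertools import count


def iterative_deepening(start, is_goal, successors, max_depth=None):
    """Run DFS to depth 0, 1, 2, ... and return the first path found."""

    def dls(state, limit):
        # Plain depth-limited DFS; memory use is linear in `limit`.
        if is_goal(state):
            return [state]
        if limit == 0:
            return None
        for nxt in successors(state):
            rest = dls(nxt, limit - 1)
            if rest is not None:
                return [state] + rest
        return None

    # With max_depth=None this loops forever if no goal is reachable.
    depths = count() if max_depth is None else range(max_depth + 1)
    for limit in depths:
        found = dls(start, limit)
        if found is not None:
            return found           # shallowest goal, as BFS would find
    return None
```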

State Space Search Control: Heuristic Search

Why not

take advantage of general principles or domain-knowledge to prune

the search

tree and avoid predictably useless work?

There are universal principles that don't compromise finding the

goal (e.g. avoid loops, α-β pruning (for games), guarantee some motion

by avoiding useless operators).

Then there are

domain-dependent principles: possibly an operator has

a cost, so we're into minimum-cost search. Last,

knowledge or dictates from the domain may give heuristics, or

rules of thumb, to guide the search ("don't develop your queen before

your knights", "don't draw to an inside straight", "place big pieces

before smaller ones", etc.). Operator cost and

perceived goodness (from heuristics or other metrics) may be combined

into a heuristic, best-first search scheme beloved of AI

people. The A* best-first algorithm is the canonical heuristic search --

it has good properties: uses domain knowledge, prunes the tree, and

(given an admissible heuristic, one that never overestimates) it's guaranteed to find the optimal

path to the goal.
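
A compact sketch of A*, not a tuned implementation. It assumes `successors(state)` yields `(next_state, step_cost)` pairs with non-negative costs and that `h(state)` never overestimates the remaining cost; all names here are illustrative. Nodes are ranked by f = g + h, cost so far plus estimated cost to go.

```python
import heapq
from itertools import count


def a_star(start, is_goal, successors, h):
    """Return (cost, path) for a cheapest path to a goal, or None."""
    tie = count()                  # tie-breaker so states are never compared
    frontier = [(h(start), next(tie), 0, start)]
    parent, best_g = {start: None}, {start: 0}
    while frontier:
        _, _, g, state = heapq.heappop(frontier)
        if g > best_g[state]:
            continue               # stale entry: a cheaper route turned up later
        if is_goal(state):
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return g, path[::-1]
        for nxt, cost in successors(state):
            new_g = g + cost
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                parent[nxt] = state
                heapq.heappush(frontier, (new_g + h(nxt), next(tie), new_g, nxt))
    return None
```

With `h` returning 0 everywhere this degenerates into uniform-cost search; a sharper (but still admissible) `h` expands fewer nodes.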

Games and State Space Search

Weiss presents the domain of games for graph search. Lots of fun,

rather trendy perhaps. I'm reluctant to pursue the topic

because of the more

complex nature of the abstraction (two opposing players).

In 172, we don't need problems that fight back.

Usually games use heuristic search in which the game position (state)

is assigned a

value (say it's positive for White), with the assumption that what's good for

White is exactly that bad for Black, and vice-versa (they're zero-sum). Thus when

players take turns, they are trying to push the value of the

position in opposite directions. This leads to minimax search,

of early game theory fame (Von Neumann and Morgenstern). White tries

to maximize his goodness, Black to minimize it, and they take turns

doing that.

Minimax allows another famous general pruning principle called

α-β pruning. It says, in effect: "You'd be crazy to

explore this line of play because you can tell right now he'll never

make the move that allows it-- he can see it's too dangerous, he can

do better, etc.".

Examples and Laboratory Assignments

For all these problems, we have a "state" describing the situation

(set of variables with values, values in an array,...)

Also an initial and (set of) goal state(s). The search tree

may be made of nodes that gather together useful auxiliary information

about a state.

The basic

routine to process a node in a search:

- Use a hash table to check if this state has already been created and
  visited (it's in the "explored set" or "closed list"). If so, return.

- If not, if it's a goal state, declare success and return.

- Else generate successor nodes by applying legal operators to the
  current state. Call self on each (for DFS) or enqueue them (for BFS),
  in some order.

- Use costs, heuristics, state goodness measures, to rank successors and
  pick the best-looking to expand next.

Finally, present the relevant answer to the user: a sequence of operations, a

final state, failure, whatever.

Examples and Laboratory Assignments

The 8 Puzzle

We saw this one's partial search tree above. Push tiles to rearrange

8 tiles in 3x3 frame with a space, transforming initial to goal state.

Best to formalize the single operation as "move the space". A state has

various numbers of successors depending on where the space is.

I don't know best representation but a 3x3 array doesn't sound crazy.

As I recall, only half the state space is reachable from an initial

state, so if you're going for

1 2 3

4 b 5

6 7 8

from a random

starting state it'd be impossible half the time.
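
The standard test for which half a state lives in counts inversions. The sketch below assumes the board is written as a tuple read row by row with 0 for the blank; `inversions` and `same_half` are names made up here. The parity rule as stated applies to the 3x3 board (more generally, odd widths).

```python
def inversions(state):
    """Count pairs of tiles that appear in the wrong relative order.

    `state` is the board read row by row, with 0 standing for the blank.
    """
    tiles = [t for t in state if t != 0]
    return sum(
        1
        for i in range(len(tiles))
        for j in range(i + 1, len(tiles))
        if tiles[i] > tiles[j]
    )


def same_half(a, b):
    """On a 3x3 board, b is reachable from a iff the inversion parities match."""
    return inversions(a) % 2 == inversions(b) % 2
```

A sideways move of the blank leaves the tile order unchanged; a vertical move carries one tile past two others, changing the inversion count by 0 or ±2. Either way the parity is preserved.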

Clearly an infinite search tree (moves can be undone forever), even though the state space is finite, so raw DFS is dangerous. An inverse "goodness" is easy

to come up with though: "How many tile moves to a solution?" Here the

fewer the better.

Important: Admissible heuristics

do not overestimate the work to find a solution: One can sometimes

think of them as the work for an easier version of the problem.

The idea is to estimate the real work as closely as possible: but if

it's

over-estimated, there's a chance that a sub-optimal solution that

(mistakenly) looked easier will be found first.

Two admissible heuristics:

H1. The number of misplaced tiles, or better,

H2. the sum of all Manhattan (= city-block metric, = sum of row+col diffs)

distances of the tiles from their goal position.

H2. is better since it more accurately (under)-estimates the work

involved,

thus does more pruning than H1 without possibly pruning away the

optimal path to the goal.

Admissible Heuristics

Initial  --->  Final

1 2 3          b 8 7

4 5 6          6 5 4

7 8 b          3 2 1

H1: 7 of the 8 tiles are out of place (tile 5 is already home), so work at least 7

(teleports!). Underestimates by a lot, too optimistic to help much in pruning.

H2: Manhattan distance each tile must travel (right thru obstacle

tiles, so underestimates): 1,4: 2,2: 3,4: ... 5,0:...8,2 total is 20.

Actual work: spin the outer ring CCW 4 times = 28 moves.
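
Both heuristics are a few lines each. This sketch assumes boards are tuples read row by row with 0 for the blank; the function names `h1` and `h2` just follow the labels above. Counting only tiles that are actually off their goal square gives H1 = 7 for this pair of boards, because tile 5 sits in the center in both.

```python
def h1(state, goal):
    """Number of tiles (blank excluded) not on their goal square."""
    return sum(1 for s, g in zip(state, goal) if s != 0 and s != g)


def h2(state, goal, width=3):
    """Sum of Manhattan distances of each tile from its goal square."""
    where = {tile: divmod(i, width) for i, tile in enumerate(goal)}
    total = 0
    for i, tile in enumerate(state):
        if tile != 0:              # the blank is not a tile
            r, c = divmod(i, width)
            gr, gc = where[tile]
            total += abs(r - gr) + abs(c - gc)
    return total


initial = (1, 2, 3, 4, 5, 6, 7, 8, 0)
final = (0, 8, 7, 6, 5, 4, 3, 2, 1)
# h1(initial, final) is 7 and h2(initial, final) is 20.
```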

Jugs

There are zillions of these puzzles out there, pretty much no two

alike. It seems the "typical assumptions" are: there is an infinite

source of water and a finite set of jugs of integer capacities, and

water can be poured back and forth, on the ground, etc. Achieve a

state

with specified quantities of water in the jugs.

Sort of a fun design process to minimize the representation and operations involved.

For any particular problem, one can have a "state" consisting of the

volumes in variables jug1, jug2, ... jugN, say. The basic

routine to process a node:

Checks if this state has already been created and

visited (it's in the "explored set" or "closed list") -- use a hash table.

If not, if it's a goal state, declare success and quit.

Else

remember the state in local variable(s), then loop

thru all possible operators to generate successors and for each,

in some order, call self on the result.

For the general N-jug problem, don't want to have to name an arbitrary

number of variables, that's what arrays are for.

So the state is an N-vector of jug

volumes.

I added an N+1st jug of infinite capacity that

could serve as faucet and drain both.

Then it seems we only need one

operation, "pour from jug A into B", which only has one interesting

case, the rest (fill from and dump to reservoir)

having easy effects on the current state. This is a pretty

significant and elegant

simplification, though I say it who shouldn't. Sign of a good rep.

if it simplifies your operators. Free to you.

In the "don't do useless work" department, the operator returns

success (and does nothing)

for:

1. A is empty

2. A = B (don't pour into yourself)

3. B full already
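
One possible rendering of that single operator, as a sketch. It assumes the state is a tuple of the N real jug volumes and that index N (one past the end) stands for the infinite reservoir, so the reservoir's contents never need storing; that encoding is a choice made for this example.

```python
def pour(state, capacity, a, b):
    """Pour from jug a into jug b and return the new state.

    state: tuple of volumes in the N real jugs; capacity: their sizes.
    Index N (== len(state)) stands for the infinite reservoir.
    """
    n = len(state)
    have = state[a] if a < n else float("inf")                 # what a can give
    room = capacity[b] - state[b] if b < n else float("inf")   # what b can take
    amount = min(have, room)
    if a == b or amount == 0:
        return state               # A empty, A = B, or B full: nothing changes
    new = list(state)
    if a < n:
        new[a] -= amount
    if b < n:
        new[b] += amount
    return tuple(new)
```

For jugs of 5 and 3, `pour((0, 0), (5, 3), 2, 0)` fills the 5-jug from the reservoir, and `pour((5, 0), (5, 3), 0, 1)` then leaves `(2, 3)`.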

Specific standard problems. Three jugs of 12, 8, and 3 gallons. Goal

is

one gallon in any jug. Easier: Two jugs of 5 and 3, your job is to

produce 4.

Find more if you like, shouldn't be hard. Make some up. Impress us.

I've

wondered (and the question should have occurred to you ;-})

how to answer this: "Given a set of jug sizes, and

you want to get X gallons, is it possible?" Better would be "For what

X is it possible?".
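
One brute-force way to explore that question is to let the search enumerate every reachable state and collect the volumes that show up. This is only a sketch: it assumes the faucet-and-drain reservoir described earlier, integer capacities, and that "getting X" means X gallons sitting in some single jug. The function name is made up.

```python
from collections import deque


def reachable_volumes(capacity):
    """Every volume that can appear in some jug, found by exhaustive search."""
    n = len(capacity)
    start = (0,) * n
    seen, queue = {start}, deque([start])
    while queue:
        state = queue.popleft()
        for a in range(n + 1):             # index n is the reservoir
            for b in range(n + 1):
                if a == b:
                    continue
                have = state[a] if a < n else float("inf")
                room = capacity[b] - state[b] if b < n else float("inf")
                amount = min(have, room)
                if amount == 0:
                    continue               # useless pour
                new = list(state)
                if a < n:
                    new[a] -= amount
                if b < n:
                    new[b] += amount
                new = tuple(new)
                if new not in seen:
                    seen.add(new)
                    queue.append(new)
    return sorted({v for s in seen for v in s})
```

For jugs of 5 and 3 this reports every volume from 0 to 5; for jugs of 6 and 4 only 0, 2, 4 and 6, which hints that the greatest common divisor of the capacities is what matters.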

Slightly non-standard but easily formalizable with slightly modified

operators. You could automate Puzzle 3 and let your search program do

the optimizing...

Dividing The Waters.

Here's another couple:

Sharing water and

balsam

Etc. etc.

Examples and Laboratory Assignments

Missionaries and Cannibals (Cannabis, Cannonballs)

3 missionaries and 3 cannibals are on one side of a river with a boat

that can hold 1 or 2 people. How to get everyone across without ever

leaving a group of missionaries outnumbered by cannibals on either side?

The boat can't cross with no one on board.

Very early AI paper (Amarel 1968).

Draw the complete state space (pretty simple). Write the program

finding the optimal solution.
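
For checking your own program, here is one possible BFS sketch. It assumes a state is (missionaries on the starting bank, cannibals on the starting bank, boat on the starting bank?) and that only the two banks, not the boat, need to be safe; the function name and parameters are illustrative.

```python
from collections import deque


def solve(n=3, boat=2):
    """Shortest list of states (m_left, c_left, boat_on_left) via BFS, or None."""

    def safe(m, c):
        # Missionaries are safe on a bank if absent or not outnumbered there.
        return (m == 0 or m >= c) and (n - m == 0 or n - m >= n - c)

    start, goal = (n, n, True), (0, 0, False)
    parent, queue = {start: None}, deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        m, c, left = state
        sign = -1 if left else 1           # boat carries people off its own bank
        for dm in range(boat + 1):
            for dc in range(boat + 1 - dm):
                if dm + dc == 0:
                    continue               # the boat can't cross empty
                nm, nc = m + sign * dm, c + sign * dc
                if 0 <= nm <= n and 0 <= nc <= n and safe(nm, nc):
                    nxt = (nm, nc, not left)
                    if nxt not in parent:
                        parent[nxt] = state
                        queue.append(nxt)
    return None
```

With the defaults the path has 12 states, i.e. 11 crossings. Calling `solve(4)` returns `None`: with a two-person boat the N=3 trick of ferrying the last two missionaries away together no longer empties the bank.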

What about N individuals in each tribe? Does a general method emerge from our

N=3 example?

Last update: 7.25.13