Good afternoon, and welcome to the Computer Science and Cognitive Science commencement ceremony. It’s a particular pleasure for me personally to be speaking today, because I’ve had so many of today’s graduates as students. It’s also a special pleasure to have so many parents and other family members present. You can be justifiably proud of what your sons and daughters have accomplished. On behalf of the entire faculty, please allow me to extend our heartfelt thanks for sharing these graduates with us, and for providing them with the emotional, spiritual, and financial support it takes to weather four years of college.

I’ve been with the department here since 1985. I was a bachelor’s recipient myself just over 22 years ago. It’s amazing how much has changed since then.

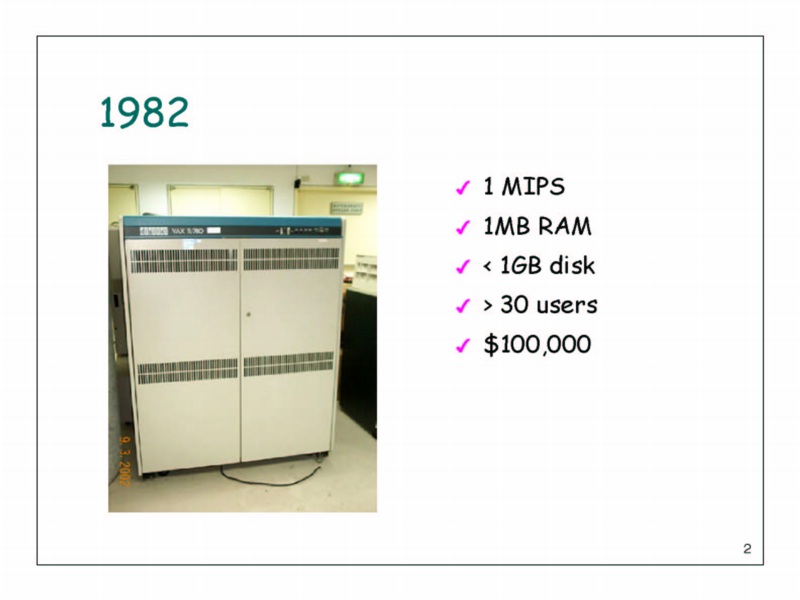

The VAX computer I used in grad school could do about a million arithmetic operations per second. It had about a megabyte of memory, and less than a gigabyte of disk space. Together with the disks, it was about the size of a minivan, and cost $100,000. I shared it with 30 other people.

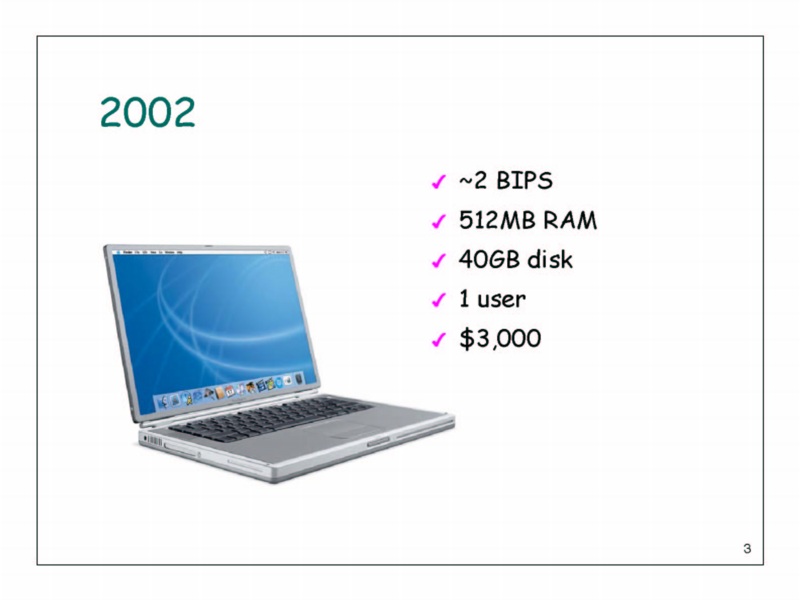

The computer with which I am presenting this talk—a one-year-old Apple Powerbook—has roughly 2,000X the computing power, 500X the memory, and 50X the disk space, and I’m the only person who uses it. It cost about 1/30th as much as the VAX, so that comes out about right.

This growth in technological capability is unheard of in other fields. Testifying before Congress in April of 1995, Ed Lazowska, chair of the Computer Science Department at the University of Washington, said:

“If, over the past 30 years, transportation technology had made the same progress as computing technology in size, cost, speed, and energy consumption, then an automobile would be the size of a toaster, cost $200, travel 100,000 miles per hour, and go 150,000 miles on a gallon of fuel. And in another 18 to 24 months, we’d realize another factor of two improvement!”

That was 8 years ago. We’ve seen 5 of those factors of 2 since then.

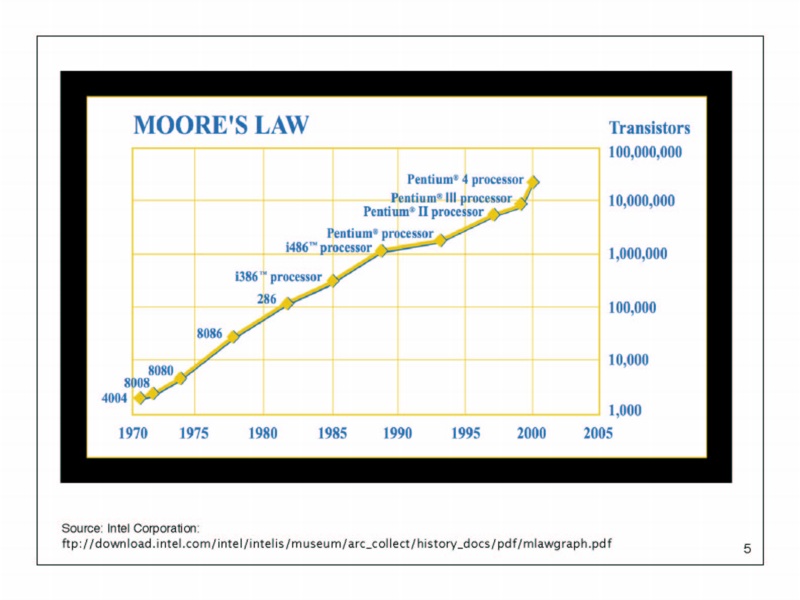

Most of you have probably heard of “Moore’s Law”. Way back in 1965, an engineer by the name of Gordon Moore, who went on to co-found Intel, observed that the number of transistors that could be packed onto a silicon chip was doubling roughly every 2 years, as we learned to etch smaller and smaller features with greater and greater accuracy.

Much to Moore’s surprise, his observation has now held true for nearly 40 years. To some extent it’s been a self-fulfilling prophecy: the longer it seemed to hold, the more it gained the status of “law”, and the more engineers felt compelled to keep on making it true.

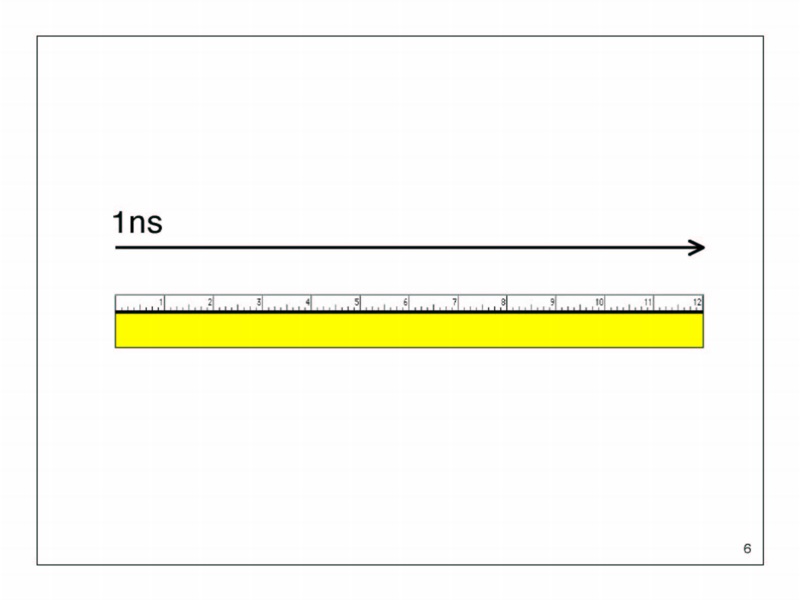

So why does smaller matter? Because of the speed of light.

In a vacuum you can propagate information at 30 billion centimeters (about a billion feet) per second. Signals in a copper wire propagate about half as fast, but close enough: if you want to move information from one place to another in less than a billionth of a second, those places had better be less than a foot apart. Since computers now perform several billion operations a second, and each operation requires moving information from one place to another dozens of times, miniaturization is crucial.
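For anyone who wants to check that arithmetic, here is a minimal sketch in Python. The function and constant names are illustrative only, and the copper figure simply takes "about half as fast" at face value rather than using a measured propagation speed.

```python
# Speed of light in a vacuum, in centimeters per second ("30 billion").
SPEED_OF_LIGHT_CM_PER_S = 3.0e10
CM_PER_FOOT = 30.48
NANOSECOND = 1e-9  # one billionth of a second


def distance_cm(seconds: float, fraction_of_c: float = 1.0) -> float:
    """Distance a signal covers in `seconds` at a given fraction of light speed."""
    return SPEED_OF_LIGHT_CM_PER_S * fraction_of_c * seconds


vacuum_cm = distance_cm(NANOSECOND)
# Assumption: copper carries signals at half of light speed, as stated above.
copper_cm = distance_cm(NANOSECOND, fraction_of_c=0.5)

print(f"vacuum: {vacuum_cm:.1f} cm ({vacuum_cm / CM_PER_FOOT:.2f} ft) per nanosecond")
print(f"copper: {copper_cm:.1f} cm ({copper_cm / CM_PER_FOOT:.2f} ft) per nanosecond")
```

In a billionth of a second a signal covers roughly 30 cm in a vacuum and roughly 15 cm under the half-speed assumption, which is where the "less than a foot apart" rule of thumb comes from.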

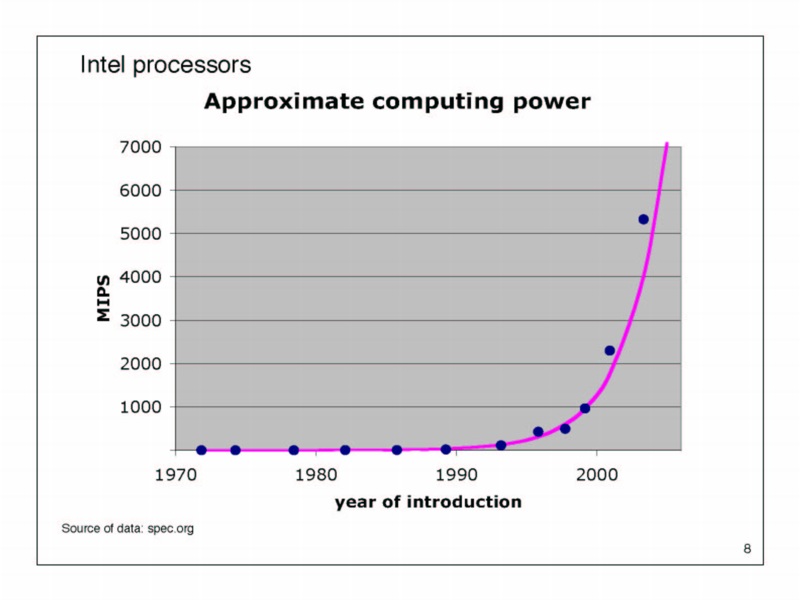

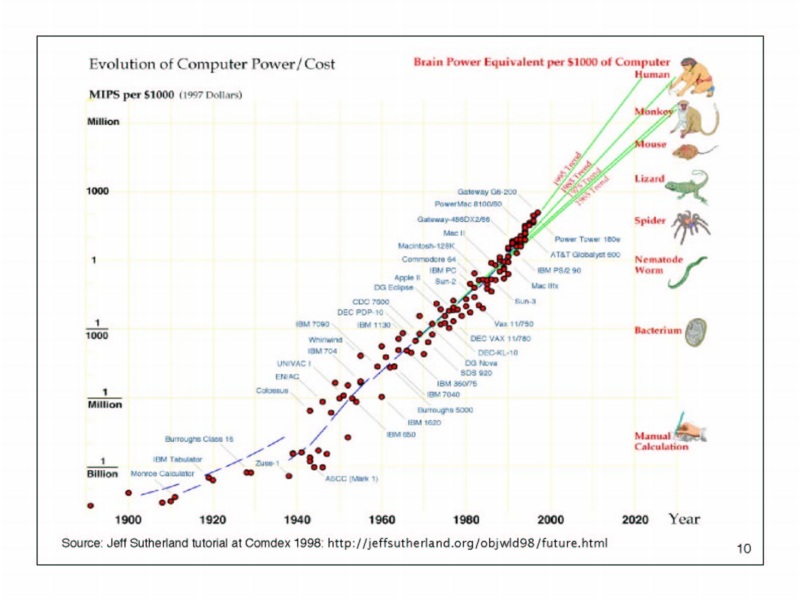

You may have noticed that the y axis on the Moore’s Law graph has a logarithmic scale. Each successive grid line is 10 times the density of the last. The miniaturization trend is particularly compelling when you translate this density into speed of computation, and plot it on a linear scale.

Like multiplying rabbits, this picture naturally raises the question: can this growth continue? There are some fundamental physical limits: We can’t etch features narrower than atoms, so at least for computers of the kind we’ve been building for 50 years, miniaturization has to stop eventually. Heat dissipation is also an increasing problem. A Pentium 4 dissipates about 75W from less than 2 square centimeters of silicon. That’s twice the heat density of a hot plate, and it’s getting worse every year. Cooling these beasts is becoming a major headache.

I don’t know anyone who thinks that Moore’s Law will continue indefinitely, but conventional wisdom holds that we have another 10–20 years to go with foreseeable technology, and we can always hope (and work toward) more fundamental breakthroughs.

If we project forward, 2X growth every 18 months means 100-fold growth every decade. What could we do in 2013 with machines 100 times as powerful as what we have today? That would be a 200 GHz laptop, with several terabytes of disk. And given that, what could we do in 2023 with machines 10,000 times as powerful as what we have today?
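The compounding behind those round numbers can be sketched in a few lines of Python. This is only an illustration of the arithmetic: `growth_factor` is a hypothetical helper, and the 18-month doubling period is the figure used in this projection, not a measured constant.

```python
def growth_factor(years: float, doubling_months: float = 18.0) -> float:
    """Total growth after `years` if capability doubles every `doubling_months`."""
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings


for years in (10, 20):
    print(f"{years} years: about {growth_factor(years):,.0f}x")

# The same decade with a slower, 24-month doubling period.
print(f"10 years at 24 months per doubling: {growth_factor(10, 24.0):,.0f}x")
```

With an 18-month doubling period the factor comes out slightly above 100 per decade and slightly above 10,000 over two decades. Stretching the doubling period to 24 months cuts the decade figure to 32, so the projection is quite sensitive to which rate you assume.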

If I knew the answers for sure I’d know where to invest my pension—but I can certainly make some guesses. For one thing, we’re going to see a lot more computing with video.

How many people have already seen the Matrix sequel? How many saw the original? It’s fun as science fiction, but its technology is also surprisingly close to reality. Within 10 years we will almost certainly be able to generate virtual worlds, in real time, that are visually indistinguishable from reality. Complete emulation of the other senses may take a little longer, but computer scientist and futurist Ray Kurzweil predicts that full-immersion virtual reality will be routine well before the middle of this century.

While today’s graduates are still in the early parts of their careers, we will be making routine use of computers that understand spoken English, and we’ll interact with increasingly “intelligent” applications that search out useful data, organize it for us, and provide advice on health care, financial planning, and a host of other activities.

In fact, if exponential growth continues, it’s reasonable to ask at what point computers will begin to surpass human beings not only in such “mechanical” operations as mathematical computation, but in the more subtle aspects of intelligence as well.

This chart plots the computational power of about 100 famous machines, again on a logarithmic scale, from the mechanical census tabulator of 1890 through the Pentium Pro of 1997. It also estimates the power of various biological systems. Those estimates are somewhat debatable, but one can make a good case that today’s personal computers are hovering around the mental capacity of a lizard, and will rival human beings by 2030 or so. Kurzweil envisions a future, starting somewhere in the 2030s, in which humans routinely merge their minds with artificially intelligent prostheses.

If that prospect makes you nervous, you are not alone.

As computing continues to pervade every aspect of human activity, more and more ethical questions will arise. Many are already critical today.

The original Matrix movie was released on March 31st, 1999. Three weeks later, Eric Harris and Dylan Klebold killed 12 fellow students, a teacher, and themselves, and injured more than 20 others, at Colorado’s Columbine High School. The connection, if any, between media and real-world violence is still hotly debated, but the prospect of frustrated high-schoolers playing full-immersion virtual Mortal Kombat and then heading out into the streets simply scares the heck out of me. We need to consider consequences.

If you computerize a hospital’s medical records, you probably save lives every day because you can access information more quickly. But if the hospital’s computer system fails you may lose a whole bunch of patients all at once if you don’t have paper backup. If you computerize the interstate banking system you may eliminate waste, and minimize the frequency of routine errors, but raise the possibility that a terrorist hacker will crash the entire US economy with one well-crafted virus. If you interconnect the databases of the FBI, local law enforcement, various other federal agencies, and the credit rating bureaus, you may be able to catch that hacker before disaster strikes, but you also make it possible for the next Joe McCarthy or J. Edgar Hoover to spy on Americans en masse, and stamp out political dissent.

Telemarketers can already buy a set of CDs describing every household in the country: names, incomes, magazine subscriptions, children’s ages and hobbies, credit card debt, charitable giving, favorite brand of soda pop, and a host of other “routine” information. None of this may be particularly sensitive in and of itself, but taken together, and collected in blanket form across large populations, it constitutes a paradigm shift in what we think of as public and as private. Scott McNealy, CEO of Sun Microsystems, has been quoted as saying “You have zero privacy anyway. Get over it.”

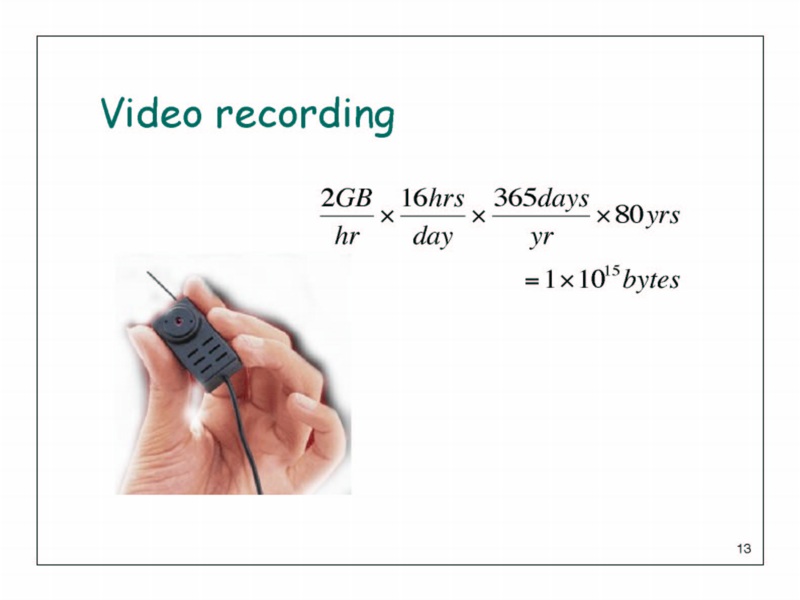

Consider this: at current compression rates, you could record your entire life (every waking minute, in DVD-quality video) in about 1 petabyte of data. At Moore’s Law rates of growth, that storage will cost about $60 twenty years from now. Long before then people will be wearing miniature cameras on a routine basis, and recording major segments of their lives. Did anyone think it strange that when the space shuttle Columbia broke up in the skies over East Texas, several people just happened to have video cameras pointing in the right direction at the right time? This will become more and more routine. How will you feel when half the people you meet are recording your every move? How will it change how you act?
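A back-of-the-envelope version of the petabyte estimate, as a sketch rather than a definitive calculation: the 16 waking hours a day, the 80-year lifespan, and the 5 megabit-per-second video bitrate are all assumptions chosen for illustration, not figures from this talk. The $60, 20-year, and 18-month numbers are the ones quoted above.

```python
# Assumptions (not from the talk): waking hours, lifespan, and average bitrate.
WAKING_HOURS_PER_DAY = 16
LIFESPAN_YEARS = 80
BITRATE_BITS_PER_S = 5e6  # assumed average for DVD-quality video

# Figures quoted in the talk.
FUTURE_COST_DOLLARS = 60.0
YEARS_AHEAD = 20
DOUBLING_MONTHS = 18


def lifetime_video_bytes() -> float:
    """Bytes needed to record every waking second at the assumed bitrate."""
    waking_seconds = WAKING_HOURS_PER_DAY * 3600 * 365.25 * LIFESPAN_YEARS
    return waking_seconds * BITRATE_BITS_PER_S / 8


def implied_cost_today() -> float:
    """Present-day cost implied by the future price and the doubling rate."""
    doublings = YEARS_AHEAD * 12 / DOUBLING_MONTHS
    return FUTURE_COST_DOLLARS * 2 ** doublings


petabytes = lifetime_video_bytes() / 1e15
print(f"lifetime of waking video: about {petabytes:.2f} PB")
print(f"implied cost of that storage today: about ${implied_cost_today():,.0f}")
```

Under these assumptions the total lands just over 1 petabyte, and working the $60 figure backward implies a present-day price of a little over $600,000 for the same storage. Changing the assumed bitrate or lifespan shifts the total proportionally.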

One of the hallmarks of a Rochester education is its integration of research skills with the integrity and breadth of a traditional liberal education. Through your clusters—in many cases double majors—and your interactions with colleagues in the humanities and social sciences, you have learned that everything is connected. Technology itself is neither good nor evil, but there is almost always more than one way of doing something, and different technical choices can have very different social consequences. Don’t be afraid to take those consequences into consideration when making technical decisions, and don’t shy away from engaging in political debate. Your expertise will be needed.

Those of you graduating here today have the privilege of working in one of the most exciting periods of change in human history. No one in this room can imagine what computing will look like when you retire, but I know that you will help to shape it. The computers you’ve used here will be as obsolete 20 years from now as the VAX I used in grad school is today. But the skills and the basic principles you’ve learned—both within and beyond computer science—will stand you in good stead.

Congratulations to you all, and best wishes for your future endeavors.